Saumya Malik

Predoctoral Researcher at the Allen Institute for Artificial Intelligence

saumyam [at] allenai [dot] org

About Me

I am applying to PhD programs in the 2025-2026 cycle!

Hi! I'm Saumya, a predoctoral young investigator on the AllenNLP and Olmo team at the Allen Institute for Artificial Intelligence (Ai2), advised by Nathan Lambert. Before that, I received my undergraduate degree from Princeton University in 2024, majoring in Computer Science and minoring in Linguistics and Cognitive Science. At Princeton, I was very fortunate to work with Professor Danqi Chen.

I do research in NLP. I'm broadly interested in better understanding how training stages, data decisions, and evaluation signals interact and how they should be sequenced, with the goal of building language models that are more capable, more controllable, and better aligned with people.

Check out some of my work on open language modeling, reward models, and instruction following below or on my Google Scholar profile!

Publications and Preprints

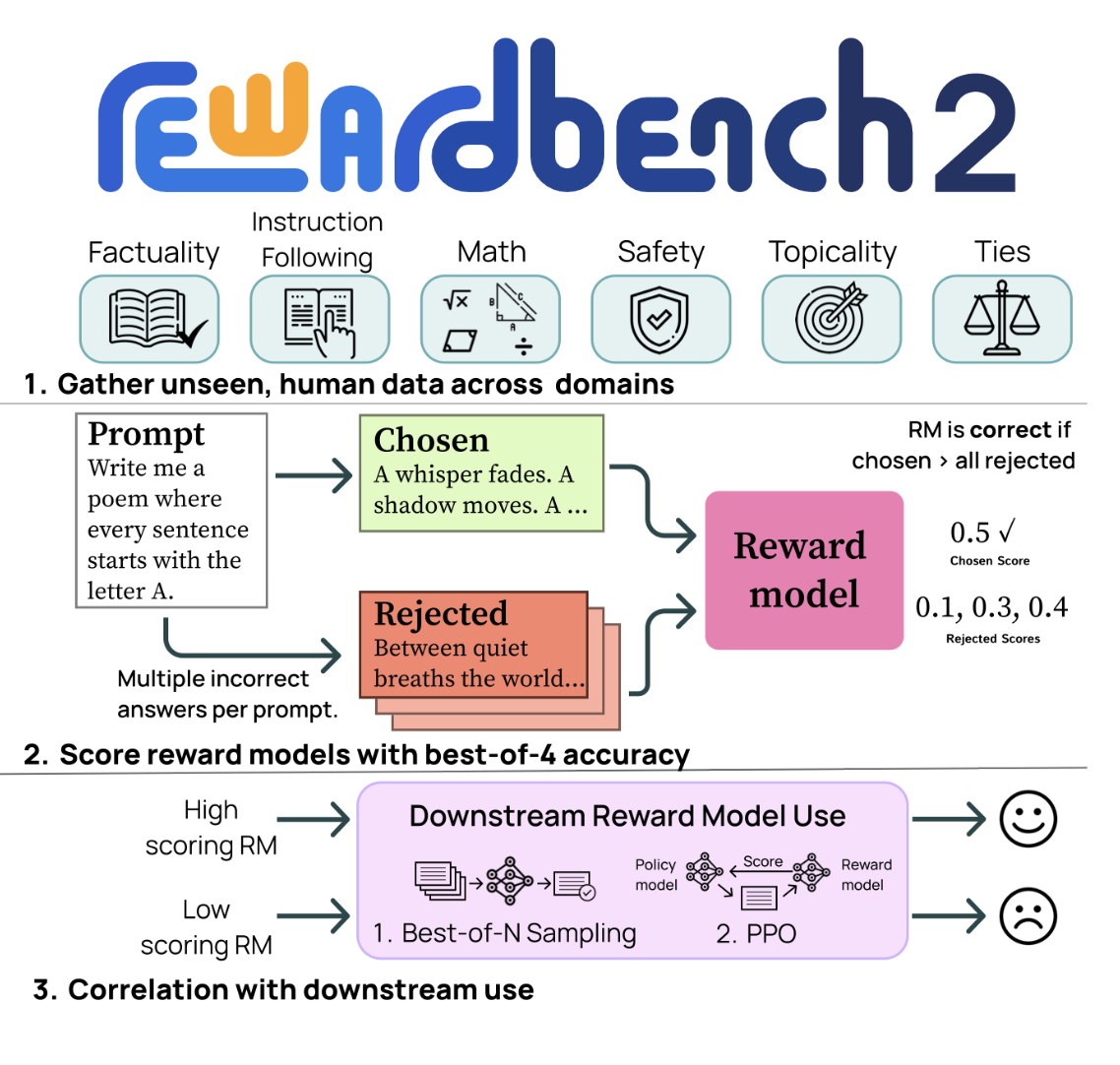

RewardBench 2: Advancing Reward Model Evaluation

ICLR, 2026

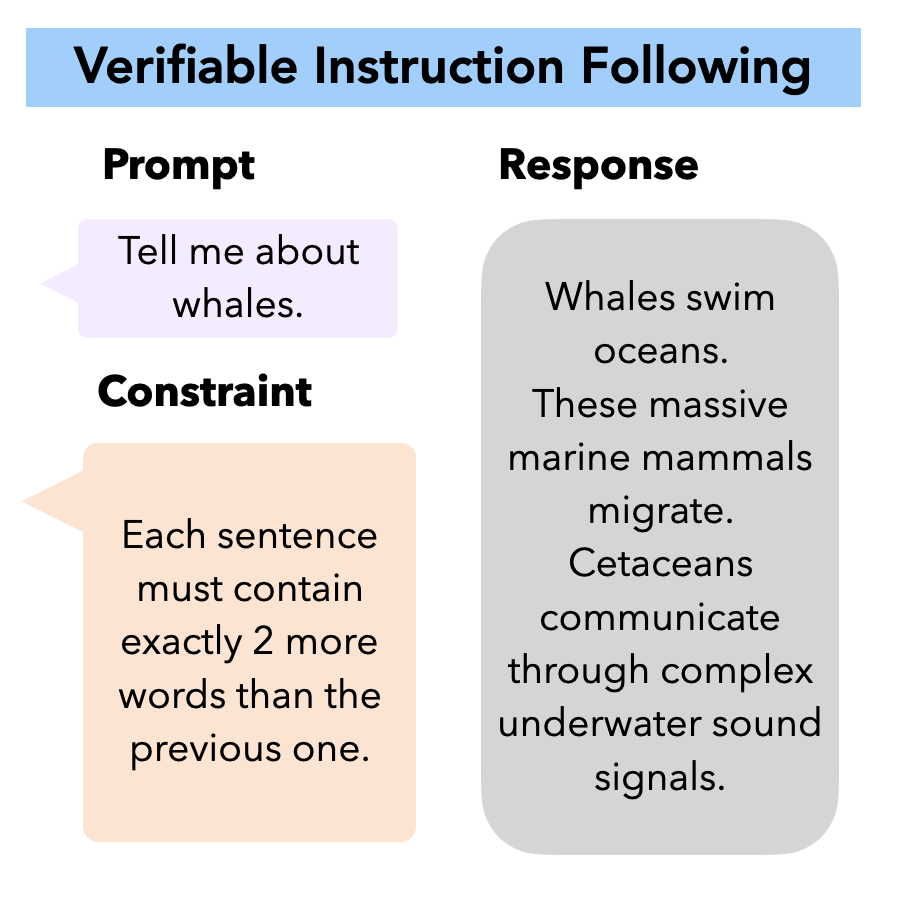

Generalizing Verifiable Instruction Following

NeurIPS Datasets & Benchmarks, 2025. Also one of 10 benchmarks adopted in the Artificial Analysis Intelligence Index!